After years of futzing about w/ workarounds and hacks, the new ParseJSON() function in Power Apps has given us a glimpse of how this will work for us going forward. The ability to push data out to other applications has been possible for awhile now, but our ability to consume JSON back into Power Apps easily has eluded most app builders.

While this function is still in the Experimental branch of PowerApps, there is no doubt that some form of this will go forward. The benefits are massive and this changes everything about how we deploy large-scale applications.

|

| Not to mention the possible security benefits |

I did say that I'd eventually get around to writing this in my last post. Now I have.

What is JSON?

Pronounced JAY-sahn or JAYSUHN depending upon who you are talking to, this is just a manner of structuring data so we can pass it back/forth between systems. Much like the classic comma-delimited CSV files used for decades, it is just a way for us to more easily export data from one platform and import it into another.

XML became dominant for a time and remains so for certain use-cases and industries. YAML has found traction as well within some areas. However, JSON has emerged as the dominant method for sharing data across the web especially when we're talking about a single transaction.

The format starts out simple in what is referred to as "name/value pairs", but can grow to be quite complex.

"name": "John",

"IQ": 20

}

In the above example, there are two variables. One named name and the other IQ. The values are a text string John and a number 20.

Just know that you can scale this to send/return multiple records, you can send dynamic data fields (the field names don't have to all match or be identical for all records), you can nest records (e.g. multiple phone numbers for a single entry), etc.

The important thing for you to know though is that being able to ingest this data source within Power Apps, means that you can now consume data directly from an array of systems that previously required some kind of translation or middleware.

Again, the entire web is JSON at this point w/ data being thrown all over the place using this model data structure. The value of being able to consume/create this from within PowerApps and turn it into Collections for our use cannot be overstated.

Basic Setup

The most common (but not the only) use-case is to use this to pass lots of data back from Power Automate in a single string. If you want, you can put the results of ParseJSON() into a variable immediately using the Set() command:

However, everything here is now treated as an "untyped" variable until we manually "type cast" these items into something specific (even though JSON itself is just a long string). There is simply no way to view what tempUntypedDataTable contains at this point.

NOTE: I've updated the below section to make this easier to troubleshoot so you can see what is returned before you run the ParseJSON() function. You cannot see what is returned from the ParseJSON() step until it is mapped, so I find it easier to put this into a simple string first and then do the ParseJSON() later.

So again, if you are retrieving data from Power Automate, it might be better to not immediately use the ParseJSON() command on what is returned. If we instead took the above as a string:

In this scenario, you can look at the literal string first (tempString) to make sure you've retrieved what you think.

The second string is "untyped" and you cannot look at it. So really, keeping things in the first state is best and only use the ParseJSON() right when you need to (see below).

Primary Use case: Returning Multiple Records from Power Automate

While you can call anything and return some JSON from it to parse/consume and turn into a Collection, the most likely use-case for initial deployments is to call Power Automate to return multiple records from SharePoint (or anywhere really) and send it back to PowerApps.

If we called SharePoint directly from PowerApps, then all of the records returned go right into a Collection:

ClearCollect(myCollection, mySharePointList);

We don't have to do anything special because SharePoint already put these variables into "types" (i.e. String, Number, Date, etc.) for us. However, when we're dealing w/ ParseJSON (at least today in its pre-release state), we have to typecast each item and tell PowerApps what kind of variable it is.

NOTE: As this is so simple already, you might ask why do it this different way. This is why.

Let's take my original example from above:

This is calling a Power Automate flow named powerAutomateAppName, sending it a variable named someString, then returning a value from Power Automate named returnString, and putting this into a variable named tempString.

Let's assume we're querying SharePoint through Power Automate to return 3 discrete values (one string, one date-time, one number) from one or more records by using the following:

While this is an example, your own code will be very similar. So let's look at what it is doing:

- Get our JSON blob as a String from Power Automate

- Clear our collection that we're going to populate w/ records

- Convert the string into an untyped variable (ParseJSON) into a Table

- Go through each record and convert each individual value into the correct type and assign it to the appropriate column in our Collection

In the example above, we're saying that the JSON value named SPItem1 (note the lack of spaces) is a Text value and assigning it to the column 'SP Item 1'. SPItem2 is typecast into a DateTimeValue and put into 'SP Item2'. Finally, SPItem3 is converted into a number and put into 'SP Item 3'.

NOTE: In my example, if you had SharePoint list items named w/ spaces as I have above ('SP Item1', 'SP Item 2', SP Item 3'), then this is LIKELY how it all would come across when converted to JSON within Power Automate. It strips the spaces and merged it all into a non-spaced string. So me putting these back into a Collection that has spaces in the names would reflect how it would appear if we had pulled this directly from SharePoint (and allow us to write back to SP at some later time more easily. However, you should always look at the raw JSON from tempString to ensure the values are correct as sometimes they will be inside single quotes like they were originally).

At this point, there is no difference between our Collection (allRecordsFromSharePoint) we created here vs. any other method of creation. It's just a normal Collection at this point.

Now for Power Automate

|

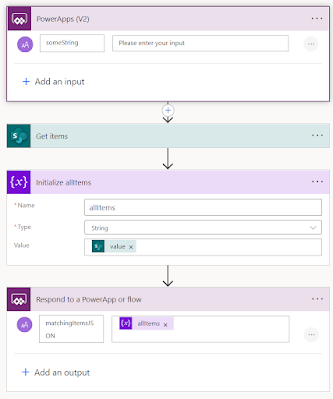

| Note that I'm using PowerApps(V2) to start |

This takes a single variable from our PowerApp (although you could take in multiple), uses it to build out the query from SharePoint, initializes a string with the VALUE (not the BODY) returned from the SharePoint - Get Items step, then returns that string to the calling PowerApp.

That's it.

Seriously.

I'm not kidding.

Save it and get out of there before you break something.

Just a Few Tips

Naming

Again, SharePoint values w/ spaces in them may be removed when you use the VALUE returned from a Get Items step in Power Automate. If you're confused about exactly what the individual columns are named in your JSON bundle then run a test through your Power Automate flow and look at the values that your variables hold:

This gets you to a point where you can check names for fields/columns and make sure they are what you think they are and name them correctly inside your app.

You Can Ignore Values

Meaning if you are returning many more values than you care about (like SharePoint Modified/Created/etc. fields), then you can only ingest the ones you want.

You Can Create Non-Existent Values

If your data may or may not include a specific column name (different queries based upon what you're sending), then it will still allow you to type cast a non-existent value into your Collection. This is really quite huge as it allows you to have more dynamic data queries (but you still need to define them within Power Apps - baby steps).

Microservices!

As we all try to pivot to a more modern design for much of our code, chunking things up into microservices helps us in dividing the labor across different labor pools that know their area better. Building this w/ JSON entities makes it clear that each chunk of the chain is fully our responsibility for dealing with. It limits some of the patterns of behavior where we are so very reliant on others to do part of the work in our series of tasks. When we receive/pass JSON entities, it is a little clearer that it is on our shoulders to do the work to verify that we're getting what we expect.

I just had a conversation w/ someone internally who had a "more complex than necessary" Power Automate script that could solve most problems simply by chopping it into several smaller Power Automate scripts that were specific to the task instead of just a "do all the automation for me at once" kind of a design.

JSON helps reinforce this behavior as it is much easier to understand each blob of JSON essentially is tied to a specific module/task.

Troubleshooting

With the new Power Automate interface, you *might* run into some trouble w/ using it and attempting to get this to work. If everything looks good, then switch back to the classic view and test again. You're welcome.

You can do the same process using an Excel table vs. Sharepoint, but you are more likely run into the "illegal naming" problem that can come up (if only because it is more likely someone else controls that Excel sheet and might do something crazy). I found Natraj's post here to be a good idea for using the Select step in PowerAutomate to rename fields. This can let you change names on name/value pairs before you attempt to send them over to PowerApps.

Final Thoughts

As I mentioned at the beginning, this is huge door swinging open for us.

While I don't think you should immediately go and rewrite all of your PowerApps to function this way, it really will change how we do row-level security as well as how we treat semi-structured data in the enterprise. Wasting time structuring SharePoint lists out w/ data types that are still very TBD can be quite annoying. Being able to dump a big Collection out into a single field could be a very good interim step toward you building out a longer-term application.

As we slowly agree on how to structure data over time, the SharePoint list (or whatever) can take shape and become more optimized. However, the application can still be delivered immediately w/ limited risk or work required in building out the full data source.

This really does mean that we are much closer now to no longer even being tethered to SharePoint or Dataverse.

I'm about as giddy as a crusty old technologist gets.

No comments:

Post a Comment

Because some d-bag is throwing 'bot posts at my blog I've turned on full Moderation. If your comment doesn't show up immediately then that's why.

DIAF Visualpath team